For those of us who’ve been working with Notes/Domino as long as we can remember, archiving Lotus Notes databases—especially large ones—can be daunting. Teamstudio Export simplifies the process, allowing you to turn Notes/Domino applications into self-contained web sites accessible from any browser. But as databases grow, so do the challenges of efficient archiving. Fortunately, with the application of a few best practices, you can optimize Export’s performance and make archiving even the largest databases manageable.

In this post, we’ll share our top seven strategies for maximizing Teamstudio Export’s archiving performance, making the process faster and smoother.

1. Set Up a Dedicated Export Workstation

A dedicated workstation on the same network as your Domino server minimizes network latency and speeds up Export. Running Export on a virtual machine (VM) local to the target server's network is a great alternative when physical proximity isn’t possible. Avoid using VPNs during the archiving process for large applications, as they can add significant delays. Exporting on-site ensures that data transfer rates remain high and consistent while keeping latency manageable.

Beyond the technical advantages, using a dedicated Export workstation reduces the risk of interruptions from users or connectivity issues—a crucial benefit for archiving large databases. When you’re three-quarters of the way through a multi-day export, a single interruption can mean lost hours or even days if the process needs to restart. By setting up a dedicated machine, you avoid this potential delay, keeping the export process running smoothly and ensuring you meet project timelines without unexpected setbacks. For teams archiving extensive or complex applications, this consistency is key.

2. Minimize Network Issues

Connectivity is key to maintaining steady archiving performance. Small network issues can cause significant delays when archiving large databases. Reduce the impact of network latency, DNS, and routing issues by making local replicas or copies of the applications you plan to archive. This approach is especially useful for older databases with complex structures, as it allows Export to work directly from local files, bypassing network obstacles.

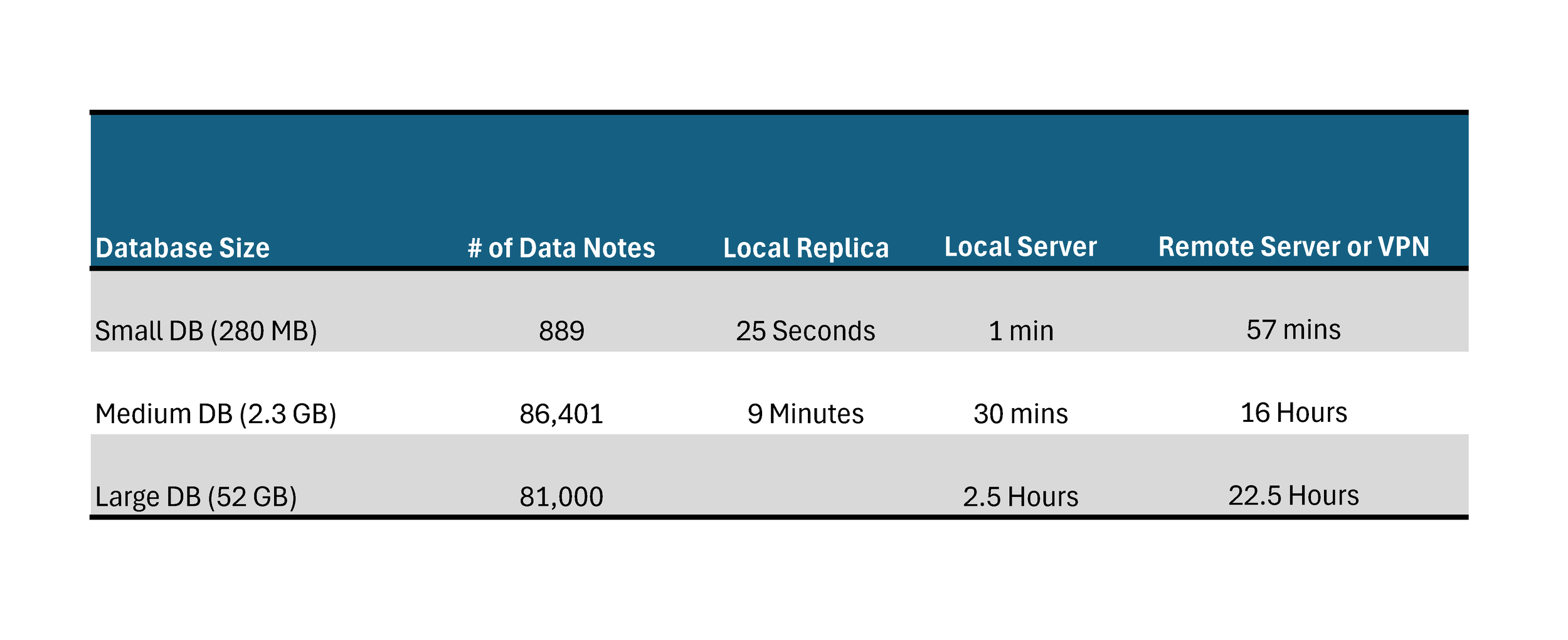

Here are a few examples of what we've seen when generating the same archive under varying network conditions. Ping your target servers: what level of latency do you see?

Here are some example archive processing times. Please note that the number of documents, design complexity, embedded views, and cross-database references can also affect them.
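If ICMP ping is blocked on your network, timing a TCP connection gives a comparable figure. The sketch below is only a rough check, not part of Export: it assumes your server listens on the default Notes port, 1352, and the function name is our own invention.

```python
import socket
import statistics
import time


def tcp_connect_latency_ms(host, port=1352, samples=5, timeout=3.0):
    """Return the median TCP connect time to host:port in milliseconds.

    Port 1352 is the default Notes (NRPC) port; change it if your
    server uses a different one.
    """
    timings = []
    for _ in range(samples):
        start = time.perf_counter()
        # Open and immediately close a connection; only the connect is timed.
        with socket.create_connection((host, port), timeout=timeout):
            pass
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings)
```

Calling `tcp_connect_latency_ms("domino01.example.com")` (a placeholder host name) from the planned Export workstation and again from a machine across the VPN shows how much latency each location adds.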

3. Use the Queue!

Not just something shouted at you for committing a social faux pas. Export has built-in support for processing up to three archive operations simultaneously, which maximizes throughput when working with multiple databases. Any additional applications added will enter a queue, ensuring that Export has a continuous workflow without any downtime. If you have a large archiving project, consider setting up multiple Export workstations to handle larger workloads in parallel, again speeding up the overall project timeline.

One of the significant benefits of this queuing approach is its ability to maintain efficiency even when an individual database encounters an error. For example, if a corrupt data note or an unexpected issue halts the processing of one database, Export moves on to the next item in the queue. This ensures a steady stream of progress, reducing idle time and preventing one problematic application from derailing or delaying your archive project. With this setup, you’re not stuck waiting for manual intervention to restart the process—Export keeps working, ensuring maximum use of available resources.

Use the Export queue to boost efficiency.
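To picture how a three-at-a-time queue keeps moving past a failure, here is a small illustration. It is not Export's implementation, just a sketch of the general pattern; `archive_one` is a hypothetical stand-in for whatever processes a single database.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def run_queue(databases, archive_one, max_parallel=3):
    """Run archive_one(db) for each database, at most max_parallel at a time.

    Returns (succeeded, failed), where failed maps a database name
    to the text of the error that stopped it.
    """
    succeeded, failed = [], {}
    with ThreadPoolExecutor(max_workers=max_parallel) as pool:
        # Everything is submitted up front; extra items wait in the pool's queue.
        futures = {pool.submit(archive_one, db): db for db in databases}
        for future in as_completed(futures):
            db = futures[future]
            try:
                future.result()
                succeeded.append(db)
            except Exception as exc:
                # One bad database is recorded and the rest keep going.
                failed[db] = str(exc)
    return sorted(succeeded), failed
```

The point of the pattern is that a failure is recorded rather than raised, so the remaining databases are still processed and the problem application can be revisited afterwards.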

4. Optimize Storage Performance and Manage Security Software

When configuring Export, set local paths for the archive, PDF, and HTML output folders. Exporting to a network or cloud location can introduce delays, as data transfer rates can vary. By first saving the archive files locally, you reduce the chances of performance bottlenecks. Once the archive is complete, you can manually move the files to a more permanent storage location.
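If you would rather script that final move than do it by hand, something like the following could work. It is a minimal sketch under our own assumptions: the function names are hypothetical, and a matching file count is only a basic sanity check, not a full integrity verification.

```python
import shutil
from pathlib import Path


def count_files(root):
    """Count regular files under root, recursively."""
    return sum(1 for p in Path(root).rglob("*") if p.is_file())


def publish_archive(local_dir, final_dir):
    """Copy a finished archive to final_dir, check the file count,
    then remove the local copy. Returns the number of files copied."""
    expected = count_files(local_dir)
    # copytree raises an error if final_dir already exists,
    # so an earlier archive is never silently overwritten.
    shutil.copytree(local_dir, final_dir)
    copied = count_files(final_dir)
    if copied != expected:
        raise RuntimeError(f"copied {copied} of {expected} files; local copy kept")
    shutil.rmtree(local_dir)
    return copied
```

Copying first and deleting only after the count matches means an interrupted transfer never costs you the local archive.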

In environments like AWS EC2, storage performance is a factor to consider. Export may write hundreds of thousands of individual files during processing, so storage performance—including IOPS, volume types, and burst rates—may impact processing times. For optimal performance, ensure that your storage configuration is capable of handling your intended workloads.
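A quick way to compare candidate volumes is to time a burst of small file writes, since that resembles the workload described above. This is a rough sketch rather than a proper benchmark: the file count and size are arbitrary assumptions, and operating system caching will flatter the result.

```python
import os
import tempfile
import time


def small_file_write_rate(target_dir, file_count=2000, size_bytes=4096):
    """Write file_count small files under target_dir and return files per second.

    The files go into a temporary subfolder that is deleted afterwards.
    """
    payload = b"x" * size_bytes
    with tempfile.TemporaryDirectory(dir=target_dir) as workdir:
        start = time.perf_counter()
        for i in range(file_count):
            with open(os.path.join(workdir, f"f{i}.html"), "wb") as fh:
                fh.write(payload)
        elapsed = time.perf_counter() - start
    # Guard against a zero reading on very coarse timers.
    return file_count / max(elapsed, 1e-9)
```

Running it against each candidate output folder gives a relative ranking; treat the absolute numbers with caution.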

Additionally, security or antivirus software on the workstation may also contribute to performance degradation. Real-time scanning of every temporary file Export creates can slow down the process considerably. To mitigate this, consider excluding Export’s working directories from real-time scanning or adjusting security software settings to balance performance with security.

5. Reduce Application Bloat for Faster Archiving

Every data note, view, and cross-database reference in your application adds to the processing load during archiving. For large, complex applications, this can mean substantial turnaround time, especially if the archive is intended for reference rather than active use. If the primary goal of your archive is to serve as a “just-in-case” repository for occasional lookup, you may want to consider leaving the data structure largely intact. However, if your use case involves more active integration with other platforms—such as workflows involving data extraction or analysis—streamlining the application to fit its archival purpose can significantly improve archiving efficiency.

For instance, consider whether the 30+ views your production application used are truly necessary for an archive-only format (don't forget about those hidden views!). By trimming views and ensuring only essential data structures remain, you reduce "bloat" and create a leaner archive that’s faster to generate and easier for end users to navigate. This not only saves time on the initial export but also makes archived data quicker and more efficient to access later, matching your archive’s purpose to its structure.

Do you really need all 267 views for your archived application? Debloat your design.

6. Prepare Applications with Pre-Archive Maintenance

Older or unused applications may lack up-to-date view indexes, since Domino servers may discard indexes that are no longer in use. Without these indexes, archiving can become time-intensive, as Export needs to rebuild every view and document within the application. Running basic maintenance tasks like Fixup, Compact, and Updall before archiving can significantly reduce processing time, especially for large databases with numerous views and data notes.

Beyond improving performance, these maintenance tasks can also help identify and resolve potential corruption within the application. Corruption, whether in a document, view, or database structure, can interrupt the archive process, forcing a restart. For large applications that take days to process, this kind of setback can be costly in terms of both time and resources. By addressing corruption proactively, you not only safeguard the integrity of the archive but also ensure smoother, uninterrupted processing—minimizing the risk of having to redo hours or even days of work.
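When there are dozens of applications to prepare, it can help to generate the Fixup, Compact, and Updall console commands from a list. The helper below is a hypothetical sketch: it only builds the basic `load` commands, with no task options, and assumes each path is relative to the server's data directory. Check the options that suit your environment before running anything.

```python
def maintenance_commands(db_paths):
    """Return Domino console commands that run Fixup, Compact,
    and Updall, in that order, on each database path given."""
    commands = []
    for db in db_paths:
        for task in ("fixup", "compact", "updall"):
            # No task options are added here; that is left to the administrator.
            commands.append(f"load {task} {db}")
    return commands
```

For example, `maintenance_commands(["crm.nsf"])` yields `load fixup crm.nsf`, `load compact crm.nsf`, and `load updall crm.nsf`, which an administrator can review and then enter at the server console.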

7. Update Your Notes Client!

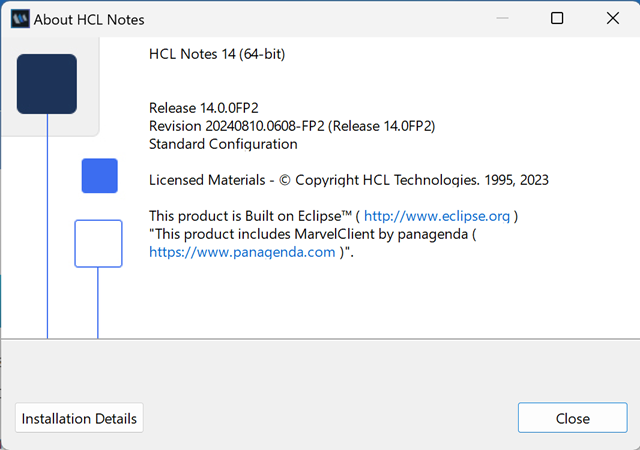

Another consideration is ensuring that the workstation running Export is using the latest version of the Notes client you have available. Even if your Domino servers are running older versions, such as Domino 9, upgrading the Notes client on the system used by Export to a more recent version can help prevent known bugs from affecting data integrity or archiving efficiency. The newer Notes client versions often include fixes and improvements that address legacy issues, ensuring smoother processing of applications and reducing the chances of interruptions caused by outdated software quirks.

Use the latest Notes client available on your Export workstation to limit exposure to legacy issues.

Archiving is a vital part of managing legacy data, and Teamstudio Export helps make it straightforward. For large databases, though, following best practices is crucial to keeping processing efficient and uninterrupted. With a few preparatory steps (dedicated workstations, process queue management, network optimization, local storage use, leaner application designs, database maintenance, and an up-to-date Notes client) you can achieve fast, reliable archives.

Ready to enhance your archiving performance? Try implementing these tips in your next project with Teamstudio Export, and watch the archiving process become simpler, faster, and more efficient. As always, if you have any questions, feel free to reach out—we’re here to help you get the most from your archiving experience.